Tag: Projects

-

Home Improvement Landscaping

The previous owner of our property believed in letting nature run its course entirely without human intervention. As a result, our front yard had been a long-outstanding project that we’d been afraid to tackle. In addition, we had some serious drainage problems that led to standing water in the driveway, and sump pumps that…

-

18 Years of Blogging

This blog has been alive in some form since 2002. As you can imagine, a lot has changed in 18 years. The blog has physically moved from servers under desks, to servers in closets, and finally to virtual machines in the cloud. Even those VMs have changed AWS instance sizes and Ubuntu LTS versions over time. The…

-

Home Improvement Porch Project

Kat bought our house around the same time that I bought my condo in 2014, before we even met! It’s an amazing property on a large wooded lot with nice privacy, set back a bit from the street in a quiet neighborhood. The house had a great back deck with room for an outdoor…

-

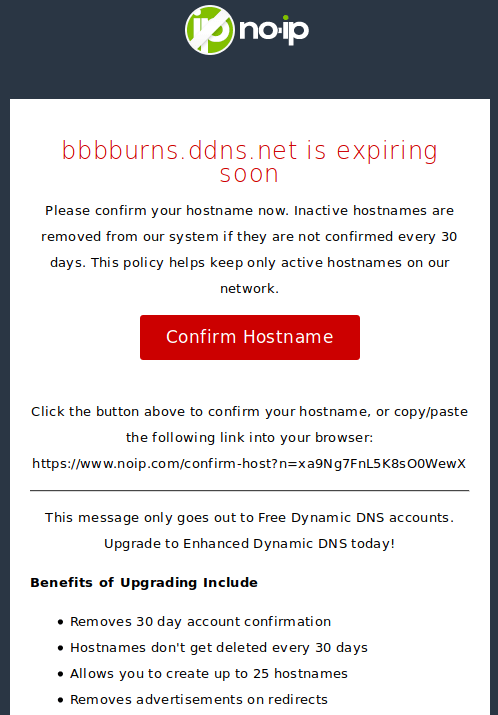

Ditching No-IP for DuckDNS

I’ve been using dynamic IP address services from no-ip.com for a long time to give a DNS name to my dynamic home IP. I remember having a no-ip.com address in college around the early 2000s for my dorm computer, and later my apartment computer. I went through a hiatus after college where I managed my domain name to…

-

Let’s Encrypt – How do I Cron?

Let’s Encrypt was really easy to set up, but Cron was less so. I kept getting emails that the Let’s Encrypt renewal was failing: I had a cron job set up with the absolute bare minimum: When I ran at the command line, everything worked just fine. I was like, “Oh – this must be some stupid…

-

Let’s Encrypt – Easy – Free – Awesome

I recently saw a news article about StartCom being on Mozilla and Google’s naughty list. Things looked bad, and my StartCom certs were up for renewal on the blog. I have seen articles flying around about Let’s Encrypt for a while now. The idea seemed awesome, but the website seemed so light on technical instructions…

-

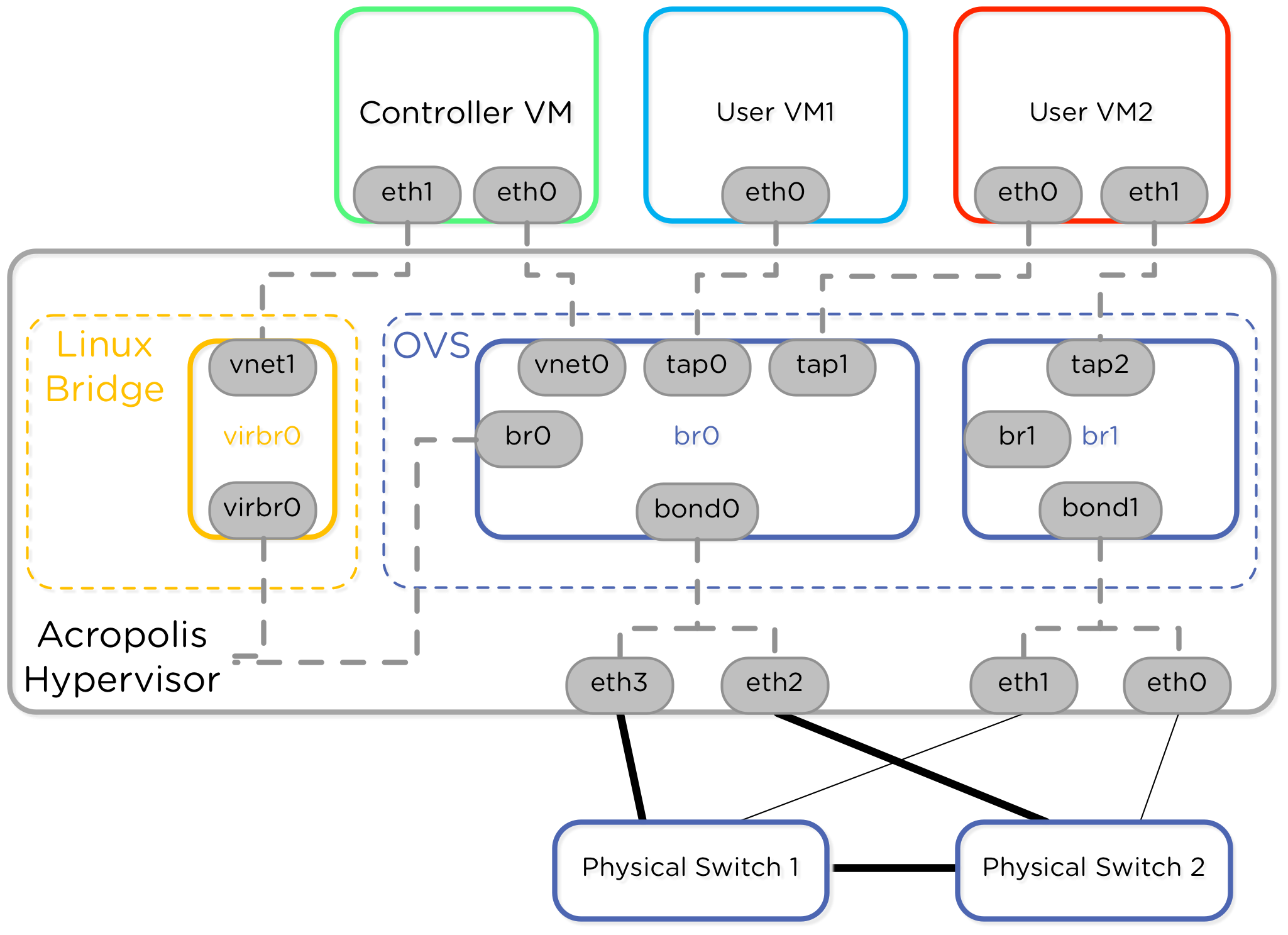

Nutanix AHV Best Practices Guide

In my last blog post I talked about networking with Open vSwitch in the Nutanix hypervisor, AHV. Today I’m happy to announce the continuation of that initial post – the Nutanix AHV Best Practices Guide. Nutanix introduced the concept of AHV, based on the open-source Linux KVM hypervisor. A new Nutanix node comes installed with AHV by…

-

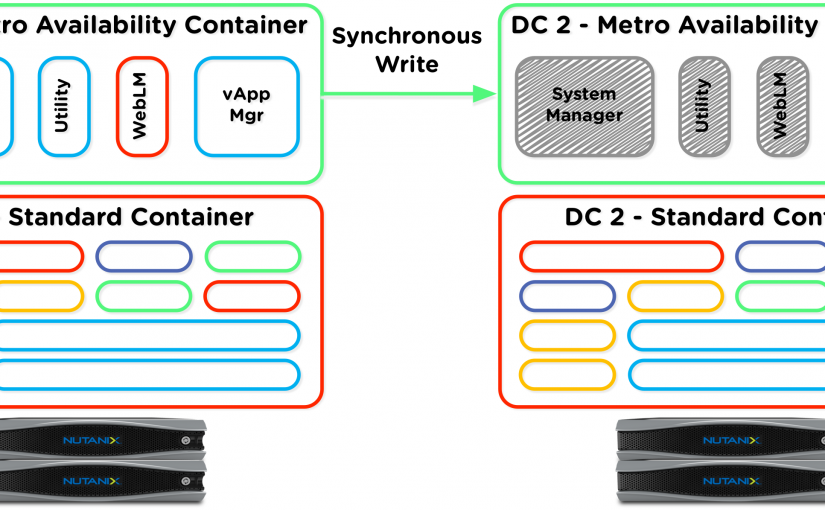

Survivable UC – Avaya Aura and Nutanix Data Protection

I wanted to share a bit of cool “value add” today, as my sales and marketing guys would call it. This is just one of the things for Avaya Aura and UC in general that a Nutanix deployment can bring to the table. Nutanix has the concept of Protection Domains and Metro Availability that have been…

-

Virtualized Avaya Aura on Nutanix – In Progress

The Avaya Technology Forum in Orlando was a great success! Thanks to everyone who attended and showed interest in Nutanix by stopping at the booth. I met a lot of interested potential customers and partners and was also able to learn more about what people are virtualizing these days. There is nothing quite like asking…

-

Nutanix and UC – Part 3: Cisco UC on Nutanix

In the previous posts we covered an introduction to Cisco UC and Nutanix as well as Cisco’s requirements for UC virtualization. To quickly summarize… Nutanix is a virtualization platform that provides compute and storage in a way that is fault tolerant and scalable. Cisco UC provides a VMware-centric virtualized VoIP collaboration suite that allows clients…