Tag: Nutanix

-

Should I Write About Nutanix Community Edition?

Would there be any interest if I wrote some articles or made some videos about Nutanix Community Edition? Right now all of the main information about it is captured in the Nutanix NEXT Community Forums. I see that Nutanix CE and the new CE 2.0 are really popular search terms. What would y’all be interested in? Would…

-

How To: Get a Job in Technical Marketing

Something different: A post about career and work instead of home improvement and travel. 😉 Thanks to my colleague Ashwini Vasanth, I was able to answer these questions on her podcast: “Through The Corporate Glass – Ep 24 – The DNA of a Technical Marketing Engineer.” It was a great experience going through the interview…

-

All Things Open 2018

Last week I attended the All Things Open conference in Raleigh. This is a conference dedicated to Open Source Software, which is very different from the usual vendor-led conferences I attend. It was also unusual to commute to a conference in the morning and then back home at night. Usually, conference season means at least…

-

Nutanix AHV Networking – What’s New

We just made a bunch of announcements about networking enhancements to AHV, and I’ll be posting about them at Nutanix.com. Here’s an overview of the series:

Part 1: AHV Network Visualization
Part 2: AHV Network Automation and Integration
Part 3: AHV Network Microsegmentation
Part 4: AHV Network Function Chains

And it wouldn’t be a blog…

-

Light Board Series: AHV Open vSwitch Networking – Part 4

Part 1 – Host Networking – Bonds
Part 2 – Host Networking – Load Balancing
Part 3 – AHV VLANs
Part 4 – User Virtual Machine VLANs and Networking (You are here!)

We’re wrapping up our four-part series on Nutanix AHV networking today with a look at User VM networking. Check out the Nutanix…

-

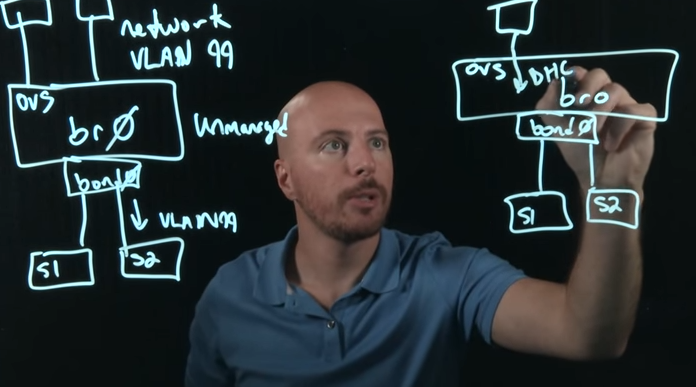

Light Board Series: AHV Open vSwitch Networking – Part 3

Part 1 – Host Networking – Bonds
Part 2 – Host Networking – Load Balancing
Part 3 – AHV VLANs (You are here!)
Part 4 – User Virtual Machine VLANs and Networking

For part 3 in our series I want to tackle VLANs in AHV. I don’t actually have a light board video for this…

-

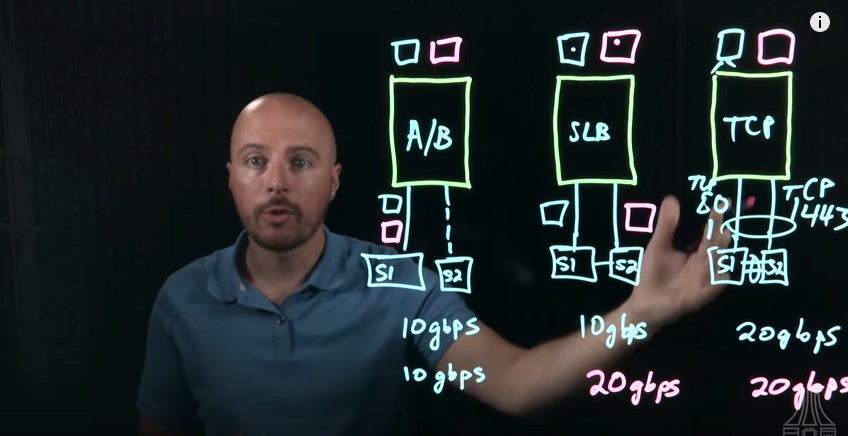

Light Board Series: AHV Open vSwitch Networking – Part 2

Part 1 – Host Networking – Bonds
Part 2 – Host Networking – Load Balancing (You Are Here!)
Part 3 – AHV VLANs
Part 4 – User Virtual Machine VLANs and Networking

In our last post we explored bridges and bonds with AHV and Open vSwitch. Today on the Nutanix .NEXT Community Blog I’m covering load balancing within…

-

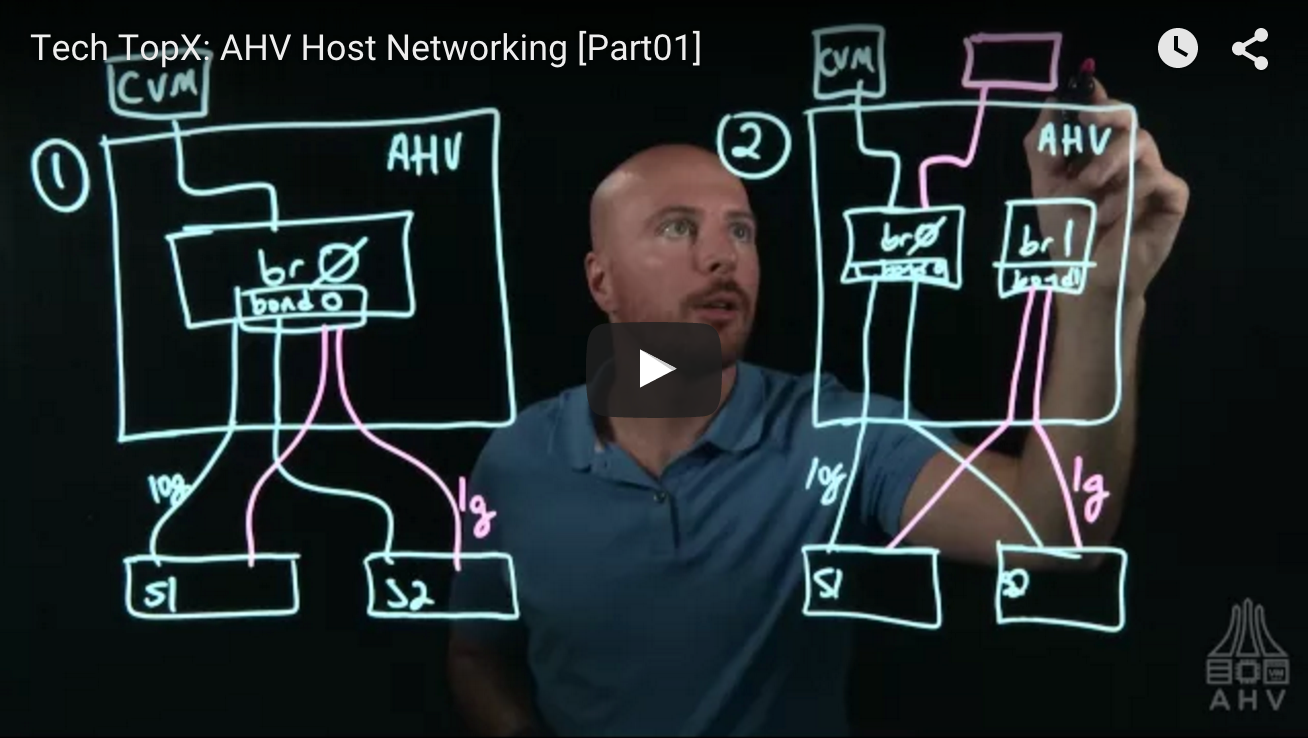

Light Board Series: AHV Open vSwitch Networking – Part 1

Part 1 – Host Networking – Bonds (You Are Here!)
Part 2 – Host Networking – Load Balancing
Part 3 – AHV VLANs
Part 4 – User Virtual Machine VLANs and Networking

I’m happy to announce the release of the first Light Board Videos I recorded with the Nutanix nu.school education team. These videos were a…

-

Nutanix AHV Best Practices Guide

In my last blog post I talked about networking with Open vSwitch in the Nutanix hypervisor, AHV. Today I’m happy to announce the continuation of that initial post – the Nutanix AHV Best Practices Guide. Nutanix introduced the concept of AHV, based on the open source Linux KVM hypervisor. A new Nutanix node comes installed with AHV by…

-

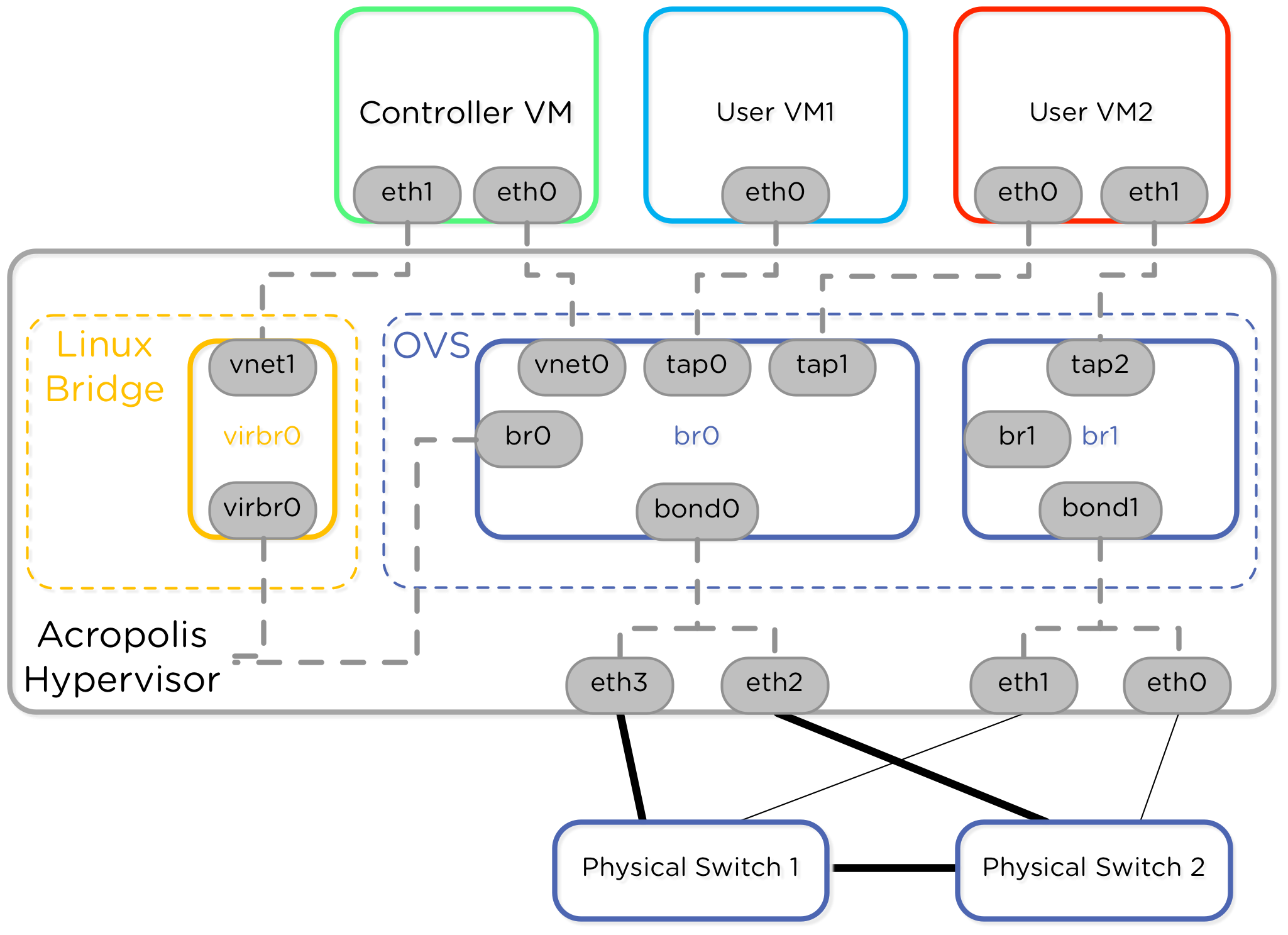

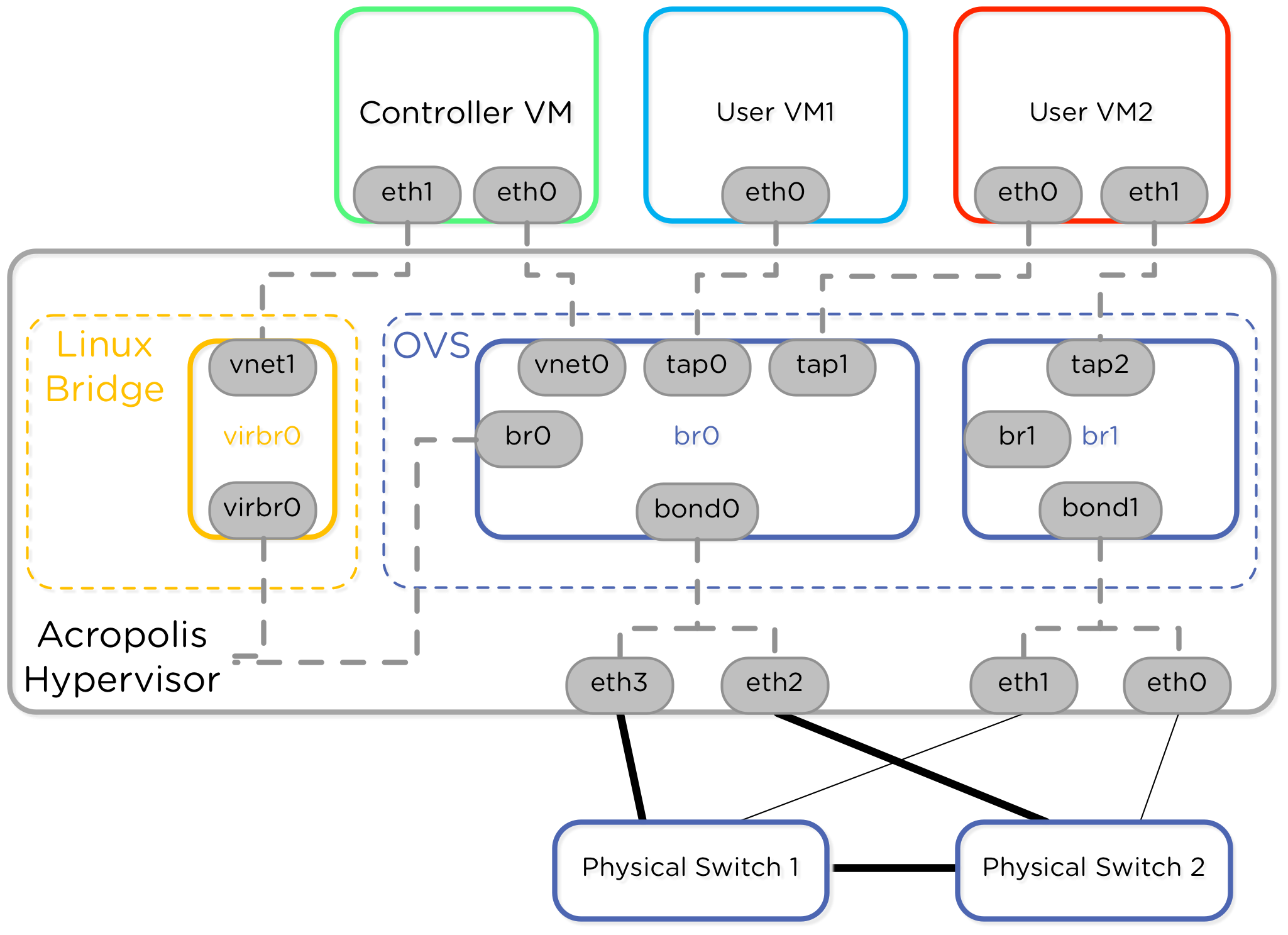

Networking Exploration in Nutanix AHV

Nutanix recently released the AHV hypervisor, which means I get a new piece of technology to learn! Before I started this blog post I had no idea how Open vSwitch worked or what KVM and QEMU were all about. Since I come from a networking background originally, I drilled down into the Open vSwitch and…